The message “Indexed, though blocked by robots.txt” means that Google has indexed a URL even though your robots.txt file blocks Googlebot from crawling it. This warning can appear after your website goes down and your robots.txt file gets modified, for example because of server problems or a malware attack.

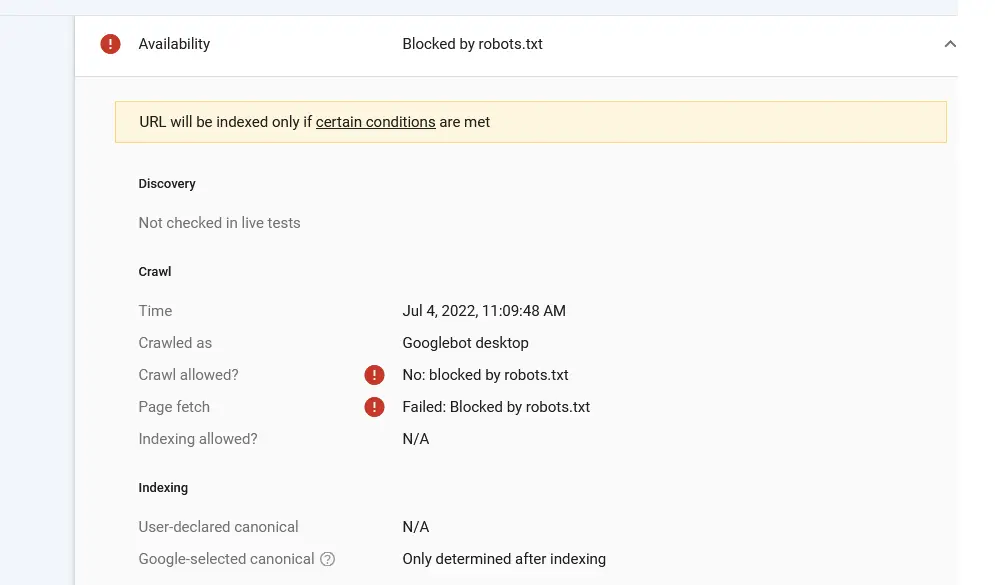

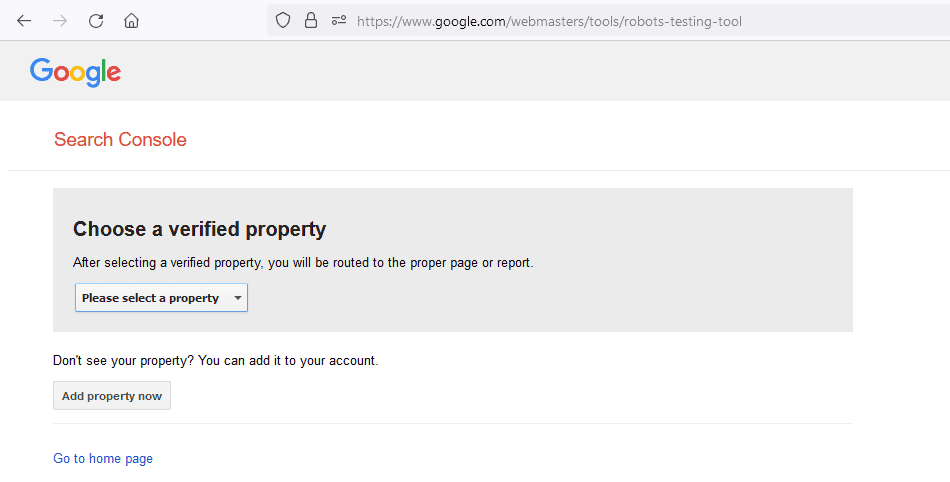

When you test the page in Google Search Console, you will get this:

So this is my story.

My WordPress website broke, and WordPress showed its installation page. When WordPress shows the installation page, the wp-config.php file has usually disappeared or can no longer be read. In that case, restore the wp-config.php and robots.txt files from your backup, and everything should work again.

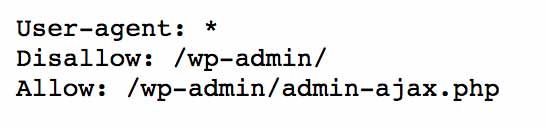

A typical WordPress robots.txt file looks like this (you can copy it):

```
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
```

However, even after I uploaded the wp-config.php and robots.txt files again, my website's XML sitemap was still blocked, and I saw this message: “Indexed, though blocked by robots.txt.”

To fix “Indexed, though blocked by robots.txt,” upload a corrected robots.txt file to your server (your WordPress site) and then tell Google that the file has changed. To do that, use the robots.txt report in Google Search Console (Settings > robots.txt), which replaced the older Google robots.txt Tester and lets you request a recrawl of the file.

I thought that Google would do everything automatically, but my new robots.txt file was on the server for several days, and Google still used the old one.

As I understand it, Google caches your robots.txt file, so you should tell Google when the file has changed.
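
Before asking Google to recrawl, it is worth confirming that your server really serves the new file; for example, a caching plugin or CDN could still be serving the previous version. The sketch below downloads `/robots.txt` and compares it with your local copy, ignoring line-ending and trailing-whitespace differences. It is a minimal example using only the Python standard library; the function names, the user-agent string and the `https://example.com` placeholder are hypothetical.

```python
import urllib.request


def normalize(text: str) -> str:
    """Ignore line-ending and trailing-whitespace differences between two copies."""
    lines = [line.rstrip() for line in text.replace("\r\n", "\n").split("\n")]
    while lines and not lines[-1]:  # drop blank lines at the end of the file
        lines.pop()
    return "\n".join(lines)


def fetch_robots_txt(site: str, timeout: float = 10.0) -> str:
    """Download the robots.txt file the server is serving right now."""
    url = site.rstrip("/") + "/robots.txt"
    # Hypothetical user-agent string; any descriptive value works.
    request = urllib.request.Request(url, headers={"User-Agent": "robots-txt-check/0.1"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def server_has_new_file(local_text: str, served_text: str) -> bool:
    """True when the served file contains the same rules as your local copy."""
    return normalize(local_text) == normalize(served_text)
```

For example, `server_has_new_file(open("robots.txt").read(), fetch_robots_txt("https://example.com"))` returns `False` while the server still serves the old version. Only once it returns `True` is there any point in asking Google to recrawl.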

After I fixed the problem, my sitemap was allowed for indexing again. As a last step, check whether any URLs are still blocked by robots.txt.

Verify with the URL Inspection tool

You can verify this by going to Coverage > Indexed, though blocked by robots.txt (in current versions of Search Console, the Coverage report is called Pages) and inspecting one of the URLs listed there. In the Crawl section, it will state “No: blocked by robots.txt” for the field Crawl allowed and “Failed: Blocked by robots.txt” for the field Page fetch.
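
If you have many URLs to check, the same information is available programmatically through the Search Console URL Inspection API. The sketch below sends one inspection request and tests the result for the “indexed, though blocked” combination. It assumes you already have an OAuth 2.0 access token for a Search Console property you own (obtaining one is not shown). The endpoint, the request fields (`inspectionUrl`, `siteUrl`) and the response fields (`robotsTxtState`, `pageFetchState`, `coverageState`) follow Google's API reference as I understand it, so check them against the current documentation before relying on this.

```python
import json
import urllib.request

# URL Inspection API endpoint (assumption: verify against Google's current reference).
INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def inspect_url(page_url: str, site_url: str, access_token: str) -> dict:
    """Ask Search Console how Google sees one URL; needs an OAuth 2.0 access token."""
    body = json.dumps({"inspectionUrl": page_url, "siteUrl": site_url}).encode("utf-8")
    request = urllib.request.Request(
        INSPECT_ENDPOINT,
        data=body,
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def blocked_but_indexed(result: dict) -> bool:
    """True when robots.txt disallows the URL but Google reports it as indexed."""
    status = result.get("inspectionResult", {}).get("indexStatusResult", {})
    return (
        status.get("robotsTxtState") == "DISALLOWED"
        and status.get("pageFetchState") == "BLOCKED_ROBOTS_TXT"
        and status.get("coverageState", "").startswith("Indexed")
    )
```

Splitting the network call from the check keeps `blocked_but_indexed` easy to test on saved responses.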

Under normal circumstances, Google would not have indexed these URLs, but it found links to them and considered them relevant enough to index. Because Google could not crawl the pages, the snippets it shows for them are probably poor.

How do you check if a page is blocked by robots.txt?

- Export the list of affected URLs from Google Search Console and sort it alphabetically in a separate document.

- Go through the URLs and decide which of the following groups each one belongs to.

- URLs you want indexed. Update your robots.txt file to give Google access to them.

- URLs you don't want search engines to access. Leave your robots.txt file unchanged, but check your site for internal links to these URLs that should be removed.

- URLs that search engines may access but that you don't want indexed. Update your robots.txt file to allow crawling and apply a robots noindex directive; Google can only see a noindex directive on pages it is allowed to crawl.

- URLs that no one should ever access, such as a staging environment. robots.txt does not protect these, so restrict access another way, for example with HTTP authentication.

- If it is unclear which part of your robots.txt is blocking these URLs, select a URL and click the TEST ROBOTS.TXT BLOCKING button in the right-hand pane. A new window opens, showing the line in robots.txt that prevents Google from accessing the URL.

- When you have made your changes, click the VALIDATE FIX button to ask Google to recheck the affected URLs against your robots.txt file.
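
To triage an exported URL list the way the steps above describe, the sketch below applies the precedence rules Google documents for robots.txt (the longest matching path wins, Allow wins a tie, `*` matches any characters and a trailing `$` marks the end of the URL) and reports which Disallow line blocks each URL, similar to what the TEST ROBOTS.TXT BLOCKING button shows. It is a simplified approximation: it only reads `User-agent: *` groups, it expects URL paths (with any query string) rather than full URLs, and all function names are hypothetical. Google's own report remains the source of truth.

```python
import re
from typing import NamedTuple, Optional


class Rule(NamedTuple):
    allow: bool  # True for an Allow line, False for a Disallow line
    path: str    # the path pattern exactly as written in robots.txt


def parse_star_rules(robots_txt: str) -> list[Rule]:
    """Collect Allow/Disallow rules from groups that apply to 'User-agent: *'.

    Simplification: groups for named crawlers are skipped entirely.
    """
    rules: list[Rule] = []
    in_star_group = False
    previous_was_agent = False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if ":" not in line:
            continue  # blank or malformed line
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            # Consecutive User-agent lines share the rules that follow them.
            in_star_group = (in_star_group and previous_was_agent) or value == "*"
            previous_was_agent = True
            continue
        previous_was_agent = False
        # An empty Allow/Disallow value matches nothing, so it is skipped.
        if in_star_group and field in ("allow", "disallow") and value:
            rules.append(Rule(field == "allow", value))
    return rules


def pattern_matches(pattern: str, path: str) -> bool:
    """Match a robots.txt pattern ('*' = any characters, trailing '$' = end of URL)."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))
    return re.match(regex + ("$" if anchored else ""), path) is not None


def deciding_rule(rules: list[Rule], path: str) -> Optional[Rule]:
    """Longest matching pattern wins; on a tie, Allow beats Disallow."""
    matches = [rule for rule in rules if pattern_matches(rule.path, path)]
    if not matches:
        return None  # no rule applies, so crawling is allowed
    return max(matches, key=lambda rule: (len(rule.path), rule.allow))


def blocked_urls(robots_txt: str, paths: list[str]) -> list[tuple[str, str]]:
    """Sort paths alphabetically; return (path, blocking line) for each blocked one."""
    rules = parse_star_rules(robots_txt)
    report = []
    for path in sorted(paths):
        rule = deciding_rule(rules, path)
        if rule is not None and not rule.allow:
            report.append((path, f"Disallow: {rule.path}"))
    return report
```

With the typical WordPress robots.txt shown earlier, `blocked_urls` reports `/wp-admin/options.php` as blocked by `Disallow: /wp-admin/` but leaves `/wp-admin/admin-ajax.php` alone, because the longer Allow rule wins. Python's built-in `urllib.robotparser` is not used here because it applies the first matching rule in file order and does not support wildcards, which differs from how Google reads the file.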

Fixing “Indexed, though blocked by robots.txt” in WordPress

The approach for fixing this problem on WordPress sites is the same as in the steps above. To find and edit your robots.txt file in WordPress, here are a few tips:

WordPress with All in One SEO

If you’re using the All in One SEO plugin, change your robots.txt file as shown below:

- Enter your wp-admin credentials.

- Navigate to All in One SEO > Robots.txt in the sidebar.

WordPress with Rank Math

If you’re using the Rank Math SEO plugin, change your robots.txt file as shown below:

- Log in to your wp-admin area.

- Navigate to Rank Math > General Settings in the sidebar. Click Edit robots.txt.

WordPress with Yoast SEO

If you’re using the Yoast SEO plugin, edit your robots.txt file as shown below:

- Log in to your wp-admin area.

- Navigate to Yoast SEO > Tools in the sidebar.

- Click File editor.

Pro tip

If you’re working on a WordPress site that hasn’t gone live yet and can’t figure out why your robots.txt file contains the following:

```
User-agent: *
Disallow: /
```

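Then go to Settings > Reading and look for Search Engine Visibility.

If the option Discourage search engines from indexing this site is selected, WordPress tells search bots not to crawl or index the site. Older WordPress versions did this with a robots.txt file like the one above; since WordPress 5.3, the setting adds a noindex robots meta tag to your pages instead.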
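
If you are not sure whether this setting is active, the sketch below checks a robots.txt text for a `Disallow: /` rule under `User-agent: *` and checks a page's HTML for a robots meta tag containing `noindex`. It looks in both places because, depending on the WordPress version, the setting may show up in either one. Downloading the two documents is left out, the function names are hypothetical, and the robots.txt parsing is simplified.

```python
from html.parser import HTMLParser


def disallows_everything(robots_txt: str) -> bool:
    """True if a group that applies to 'User-agent: *' contains 'Disallow: /'."""
    in_star_group = False
    previous_was_agent = False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            # Consecutive User-agent lines belong to the same group.
            in_star_group = (in_star_group and previous_was_agent) or value == "*"
            previous_was_agent = True
        else:
            previous_was_agent = False
            if in_star_group and field == "disallow" and value == "/":
                return True
    return False


class RobotsMetaFinder(HTMLParser):
    """Collects the content of every <meta name="robots"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = {name.lower(): (value or "") for name, value in attrs}
        if tag == "meta" and attributes.get("name", "").lower() == "robots":
            self.directives.append(attributes.get("content", "").lower())


def has_noindex_meta(html: str) -> bool:
    """True if any robots meta tag on the page contains 'noindex'."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return any("noindex" in directive for directive in finder.directives)
```

If either function returns `True` for a site that should be public, check the Search Engine Visibility option first.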

Fixing “Indexed, though blocked by robots.txt” on Shopify

Shopify generates a default robots.txt file for every store. For a long time, this file could not be edited at all; since 2021, Shopify lets you customize it by adding a robots.txt.liquid template to your theme.

You may have seen the “Indexed, though blocked by robots.txt” message in Google Search Console or received an email from Google titled “New index coverage issue detected” about this issue. In SEO, you should never leave anything to chance, so we advise that you always check which URLs the message applies to.

Check whether any important URLs are blocked. If they are, the following options take some effort but let you control the robots.txt file your store serves:

- Configure a reverse proxy

- Use Cloudflare Workers

Whether these options are worth pursuing depends on the potential payoff. If it is substantial, consider implementing one of them.

You can use the same approach on Squarespace.

Frequently Asked Questions

1) Why does Google show this warning for my pages?

Your robots.txt file blocks Google from crawling some pages, but Google found links to those pages. If Google considers them important enough, it indexes them anyway, without crawling their content.

2) How do I fix this issue?

Make sure that the pages you want Google to index are accessible to its crawlers. Also, avoid linking internally to pages you do not want indexed. The detailed steps are in the section “How do you check if a page is blocked by robots.txt?” above.

3) Can I edit the robots.txt file in my WordPress installation?

Yes. You can edit robots.txt directly from the wp-admin dashboard with a popular SEO plugin such as Yoast SEO, Rank Math, or All in One SEO.
